How I automated my website, the Hugo way

Introduction

As you’ll have seen in my previous post, I have migrated my blog over to the popular static site generator, Hugo. As part of this migration, I also decided to move my web server somewhere that isn't internet-facing, and away from my recently despised host (OVH).

I run a private Kubernetes cluster in my home lab, and with Hugo being very lightweight I figured I may as well host it at home, using Cloudflare Tunnels to handle the routing and SSL. Cloudflare also gives me caching and an offline mode, should I do something dumb and kill my home cluster or internet connection.

This post is a detailed run-through of how I set up the infrastructure side.

Webserver selection

The first thing I had to decide was which web server to front my Hugo website with. Hugo generates static HTML, CSS and JS files that you can then host with any web server you like.

Examples could be:

- express.js

- apache2

- nginx

- caddy

- python SimpleHTTPServer

- hugo server
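Hugo's output is nothing but files, so even Python's standard library can serve it. Below is a minimal sketch using http.server, the Python 3 replacement for SimpleHTTPServer. It assumes the site has already been built into a local directory (Hugo writes to public/ by default). The serve_static helper is hypothetical.

```python
import functools
import http.server
import threading


def serve_static(directory: str, host: str = "127.0.0.1", port: int = 0) -> http.server.ThreadingHTTPServer:
    """Serve the files in `directory` (e.g. Hugo's public/ output) from a background thread.

    port=0 asks the OS for a free port; the chosen one is in server.server_address[1].
    """
    # Bind the handler to the build directory instead of the current working directory.
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

From a shell, python3 -m http.server --directory public 8080 does the same job. The Python docs warn that http.server is not meant for production, so treat it as a quick local check only.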

I already use a popular ingress controller in my K8s stack, nginx-ingress, and have always been a fan of how lightweight yet powerful nginx is. Apache is quite bloated, and the other options involved more config work than I really wanted to do and maintain.

I figured, why not double down on nginx, and have nginx-inception!

Architecture

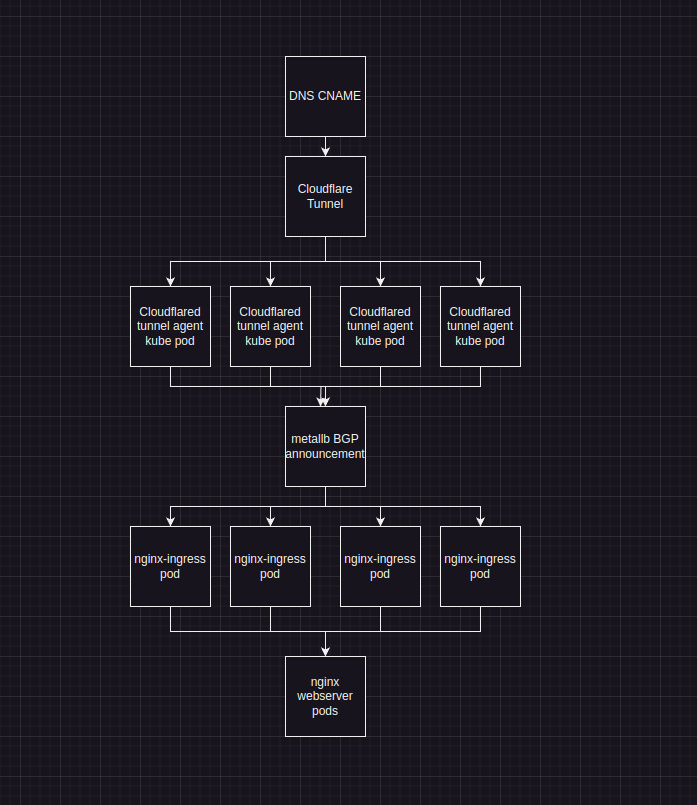

Here is a simple diagram of the routing involved:

Working from the top down, this is how it hangs together:

DNS CNAME / Cloudflare Tunnel

When using Cloudflare Tunnels, you are given a unique DNS record name for the tunnel itself. You can then create a CNAME record for your host (in my case, ainsey11.com) that points at the name Cloudflare gives you.

When traffic arrives at that CNAME, Cloudflare sends it to one of the cloudflared daemons connected to the tunnel. Each cloudflared daemon has a configuration file that tells it how to route traffic for the tunnel.
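Cloudflare's documentation gives the CNAME target as <tunnel UUID>.cfargotunnel.com. Here is a small sketch that derives the record from a tunnel ID. The helper name and the example UUID in the usage are hypothetical.

```python
import uuid
from typing import Tuple


def tunnel_cname_record(hostname: str, tunnel_id: str) -> Tuple[str, str]:
    """Return the (name, target) pair for a CNAME pointing `hostname` at a Cloudflare Tunnel.

    Raises ValueError if `tunnel_id` is not a valid UUID.
    """
    canonical = str(uuid.UUID(tunnel_id))  # validates, and normalises to lowercase hyphenated form
    return hostname, f"{canonical}.cfargotunnel.com"
```

For example, tunnel_cname_record("ainsey11.com", "123e4567-e89b-12d3-a456-426614174000") yields a target ending in .cfargotunnel.com. ainsey11.com is the zone apex, so this relies on Cloudflare's CNAME flattening, because standard DNS does not allow a plain CNAME at the apex.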

Cloudflared Tunnel

Cloudflare have this wonderful thing called Tunnels, as mentioned in the previous section. Tunnels let you run services on a private network that is not reachable from the internet, and route traffic to them via the Cloudflare network. The cloudflared daemon only makes outbound connections to Cloudflare, so no inbound ports need to be opened.

You can install the daemon directly on a host, or run it in a Docker container. You can also run multiple daemons for one tunnel, which makes for a nice redundant setup. You can read more about it in Cloudflare's Tunnel documentation.

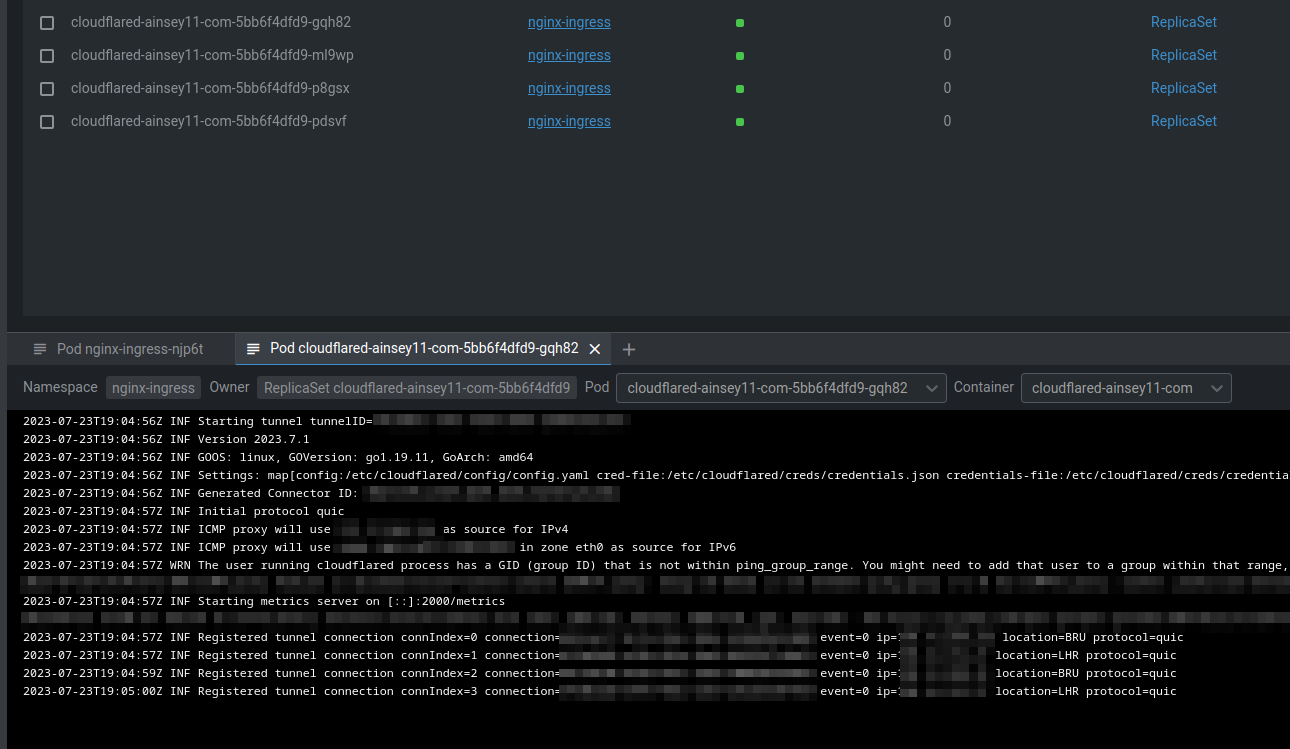

In my home lab I have six Kubernetes worker nodes. To make the best use of these, and to allow for things such as patching or maintenance, I wrote a Kubernetes manifest that runs the cloudflared Docker image as a Kubernetes Deployment (which manages a ReplicaSet of pods).

The pods mount the tunnel credentials from my Kubernetes secrets provider, and their configuration from a Kubernetes ConfigMap.

Here is a snippet from my manifest:

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cloudflared-ainsey11-com
  namespace: nginx-ingress
spec:
  selector:
    matchLabels:
      app: cloudflared-ainsey11-com
  replicas: 4
  template:
    metadata:
      labels:
        app: cloudflared-ainsey11-com
    spec:
      containers:
        - name: cloudflared-ainsey11-com
          image: cloudflare/cloudflared:latest
          resources:
            requests:
              cpu: '20m'
              memory: '128Mi'
            limits:
              memory: "512Mi"
              cpu: "1"
          args:
            - tunnel
            - --config
            - /etc/cloudflared/config/config.yaml
            - run
          livenessProbe:
            httpGet:
              path: /ready
              port: 2000
            failureThreshold: 1
            initialDelaySeconds: 10
            periodSeconds: 10
          volumeMounts:
            - name: config
              mountPath: /etc/cloudflared/config
              readOnly: true
            - name: creds
              mountPath: /etc/cloudflared/creds
              readOnly: true
      # volumes: the "config" ConfigMap and "creds" Secret mounted above are defined here (not shown in this snippet)
```

cloudflared exposes a readiness endpoint (/ready) on its metrics port, which the liveness probe above polls. The cluster can then warn me when something goes awry with a pod, and restart it automatically.
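Here is how the probe settings behave in practice. The kubelet treats an HTTP probe as successful for any status code from 200 to 399, and restarts the container once failureThreshold consecutive probes have failed. cloudflared's /ready returns 200 while it has at least one active connection to Cloudflare, and 503 otherwise. The code below is a simplified model of that logic, not the kubelet's actual code. With failureThreshold: 1 and periodSeconds: 10, a single failed probe is enough to trigger a restart.

```python
from typing import List, Optional


def probe_ok(status_code: int) -> bool:
    """Kubernetes httpGet probes pass for any status in [200, 400)."""
    return 200 <= status_code < 400


def first_restart(statuses: List[int], failure_threshold: int) -> Optional[int]:
    """Return the index of the probe result that triggers a restart, or None.

    Only consecutive failures count; any successful probe resets the counter.
    """
    failures = 0
    for i, status in enumerate(statuses):
        if probe_ok(status):
            failures = 0
        else:
            failures += 1
            if failures >= failure_threshold:
                return i
    return None
```

For example, the probe results [200, 503, 200] restart the pod at the second probe with failureThreshold: 1, but would not with a threshold of 2.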

Once the pods launch, I can see them spawn on my nodes, and the logs show a successful registration with Cloudflare:

Now when traffic hits the CNAME, Cloudflare will choose one of these pods to send the traffic to. Each pod has a configuration file telling it what to do from there.

In my case, it looks a little like this:

```yaml
# Name of the tunnel you want to run
tunnel: XXXXXX-XXXXXX-XXXXX-XXXXX-XXXXXXXXX
credentials-file: /etc/cloudflared/creds/credentials.json
metrics: 0.0.0.0:2000
no-autoupdate: true
ingress:
  - hostname: "ainsey11.com"
    service: https://nginx-ingress:443
    originRequest:
      connectTimeout: 30s
      timeout: 30s
      originServerName: ainsey11.com
  - service: http_status:404
```

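With this config you can handle multiple domains via one tunnel, and send the traffic to whichever service you like. The final rule has no hostname, so it acts as a catch-all and returns a 404 for anything unmatched. In my case I’m sending the traffic back out to my nginx-ingress service (more on this later).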
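cloudflared evaluates ingress rules from top to bottom and uses the first one that matches. That is why the rule with no hostname has to come last, as the catch-all. Below is a simplified Python model of that matching. It only covers exact hostnames and a leading "*." wildcard; cloudflared also supports path regexes, which this sketch ignores. The RULES list mirrors the config above.

```python
from typing import List, Optional, Tuple

Rule = Tuple[Optional[str], str]  # (hostname, or None for the catch-all; service)

RULES: List[Rule] = [
    ("ainsey11.com", "https://nginx-ingress:443"),
    (None, "http_status:404"),
]


def host_matches(rule_host: Optional[str], request_host: str) -> bool:
    if rule_host is None:  # a rule without a hostname matches everything
        return True
    rule_host, request_host = rule_host.lower(), request_host.lower()
    if rule_host.startswith("*."):
        # "*.example.com" matches any subdomain, but not example.com itself
        return request_host.endswith(rule_host[1:])
    return request_host == rule_host


def route(request_host: str, rules: List[Rule] = RULES) -> str:
    """Return the service of the first rule that matches `request_host`."""
    if not rules or rules[-1][0] is not None:
        raise ValueError("the last ingress rule must be a catch-all")
    for rule_host, service in rules:
        if host_matches(rule_host, request_host):
            return service
    raise AssertionError("unreachable: the catch-all always matches")
```

Here route("ainsey11.com") returns the nginx-ingress service, while any other hostname falls through to http_status:404.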

Nginx Ingress / BGP

As previously mentioned, I use the popular nginx-ingress to handle ingress traffic into my K8s cluster. I also use another popular service called MetalLB.

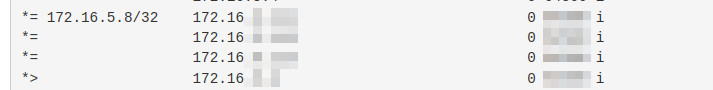

MetalLB uses BGP to announce IP addresses, from a subnet I define, to my home router. Every node in my cluster runs a MetalLB speaker pod, which announces the IP allocated to each service via BGP. This means any one of my nodes can receive traffic destined for that IP, giving further fault tolerance.
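Every node advertises the same IP, so the router ends up with several equal-cost next hops for it. Assuming ECMP is enabled on the router, it spreads flows across those next hops, typically by hashing packet header fields so that every packet of one connection takes the same path. The code below is a toy Python model of that idea. Real routers use their own hash functions and header fields, and the node names and addresses in the usage are hypothetical.

```python
import zlib
from typing import List, Tuple

Flow = Tuple[str, int, str, int, str]  # (src_ip, src_port, dst_ip, dst_port, protocol)


def pick_next_hop(flow: Flow, next_hops: List[str]) -> str:
    """Deterministically map a flow to one of the nodes advertising the IP."""
    if not next_hops:
        raise ValueError("no node is advertising this IP")
    key = "|".join(map(str, flow)).encode()
    # Sorting makes the choice independent of the order routes were learned in.
    return sorted(next_hops)[zlib.crc32(key) % len(next_hops)]
```

For example, pick_next_hop(("192.168.1.20", 51515, "172.16.5.8", 443, "tcp"), ["node1", "node2", "node3"]) always returns the same node for that connection. If that node dies, its BGP session drops and the router withdraws its route. New flows then hash across the remaining nodes.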

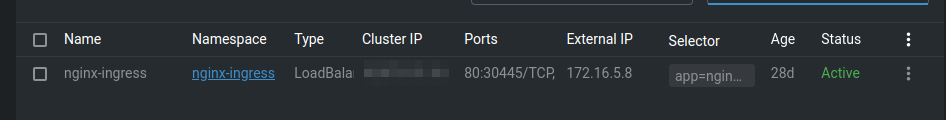

nginx-ingress exposes itself through a LoadBalancer service, which MetalLB picks up and announces via BGP to my router. In my case, the IP 172.16.5.8 is announced:

And in Kube:

This setup lets me reach my nginx-ingress pods (one per host) directly from my LAN, which is useful for exposing services. I often pair this with external-dns and my BIND9 DNS cluster to automatically announce new services into my home network.

The BGP part isn’t important here, as it is only needed to reach nginx-ingress from outside the cluster. The cloudflared tunnel pods run inside the cluster, so traffic between them and nginx-ingress never leaves it.
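The cloudflared config line service: https://nginx-ingress:443 depends on cluster DNS. Inside a pod, a short name like nginx-ingress is expanded using the search domains in the pod's /etc/resolv.conf. Under the default ClusterFirst DNS policy, these are <namespace>.svc.cluster.local, svc.cluster.local and cluster.local, with options ndots:5. The sketch below shows the resulting lookup order. It assumes the default cluster.local domain and that the Service lives in the same nginx-ingress namespace as the cloudflared pods. Nodes can add further search domains, which the sketch ignores.

```python
from typing import List


def lookup_order(name: str, namespace: str, cluster_domain: str = "cluster.local", ndots: int = 5) -> List[str]:
    """Candidate FQDNs a glibc-style resolver tries, in order, from inside a pod."""
    if name.endswith("."):
        return [name]  # already fully qualified: no search-list expansion
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    # Names with fewer than `ndots` dots try the search list first, then the name as-is.
    return expanded + [absolute] if name.count(".") < ndots else [absolute] + expanded
```

The first candidate for nginx-ingress is nginx-ingress.nginx-ingress.svc.cluster.local., which is the Service's in-cluster name, so the request goes straight to its ClusterIP.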

So, to expose my Hugo website, I launch a normal nginx pod, mounting the web files as a volume:

```yaml
containers:
  - name: ainsey11
    image: nginx:latest
    imagePullPolicy: IfNotPresent
    resources:
      requests:
        memory: "128Mi"
        cpu: "100m"
      limits:
        memory: "2048Mi"
        cpu: "2"
    ports:
      - containerPort: 80
    volumeMounts:
      - name: ainsey11-com
        mountPath: /usr/share/nginx/html
```

and then a service to allow inbound routing to the pod:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: metallb-ainsey11-website
  namespace: public-services
spec:
  selector:
    app: ainsey11
    svc: website
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 80
```
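A Service only sends traffic to pods whose labels include every key/value pair in its selector. The nginx pod template therefore needs both app: ainsey11 and svc: website; the container snippet above doesn't show the pod labels. Here is a minimal model of equality-based selector matching.

```python
from typing import Dict

SERVICE_SELECTOR = {"app": "ainsey11", "svc": "website"}


def selector_matches(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """True if every key/value in `selector` is present on the pod; extra pod labels are fine."""
    if not selector:
        return False  # a Service without a selector gets no automatically managed endpoints
    return all(pod_labels.get(key) == value for key, value in selector.items())
```

A pod labelled only app: ainsey11 would not be selected, and the Service would have no endpoints.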

Finally, my Ingress resource, which tells the nginx-ingress controller to route traffic for the ainsey11.com domain to my pod:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ainsey11-website-ingress
  namespace: public-services
  annotations:
    kubernetes.io/ingress.class: "nginx"
    # ingress-nginx annotation names use hyphens, not underscores
    nginx.ingress.kubernetes.io/proxy-ssl-server-name: "on"
    nginx.ingress.kubernetes.io/proxy-ssl-name: "ainsey11.com"
spec:
  tls:
    - hosts:
        - ainsey11.com
      secretName: ainsey11-com-tls
  rules:
    - host: ainsey11.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: metallb-ainsey11-website
                port:
                  number: 80
```
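With pathType: Prefix and path: /, this rule catches every request for the host. Below is a simplified model of the Prefix semantics from the Kubernetes documentation: paths are compared element by element on "/", so /aaa/bb does not match /aaa/bbb, and a trailing slash is ignored. ingress-nginx turns rules into nginx location blocks, so its real behaviour may differ in edge cases.

```python
from typing import List


def _elements(path: str) -> List[str]:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def prefix_match(rule_path: str, request_path: str) -> bool:
    """Kubernetes Ingress pathType: Prefix, compared element by element."""
    rule, request = _elements(rule_path), _elements(request_path)
    return request[: len(rule)] == rule
```

Here prefix_match("/", "/posts/hugo/") is true, which is why one rule is enough to serve the whole site.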

To test this, I edited my hosts file to point ainsey11.com at my BGP-announced IP. With that in place, I could access my website.

I then removed the hosts entry and updated my DNS records to contain the Cloudflare CNAME record. After waiting for the TTL to expire, I tested my website and, voilà, it worked.
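The hosts-file trick works because nginx-ingress picks the rule based on the requested hostname, not on the IP the request arrived at. You can run the same check without editing the hosts file: connect to the IP and set the Host header yourself. Below is a Python sketch over plain HTTP; the status_via_ip helper is hypothetical. With the TLS section in place, ingress-nginx will usually answer plain HTTP with a redirect to HTTPS. For an HTTPS check, curl's --resolve option also sets the TLS SNI name, for example curl --resolve ainsey11.com:443:172.16.5.8 https://ainsey11.com/.

```python
import http.client


def status_via_ip(ip: str, host: str, port: int = 80, path: str = "/", timeout: float = 5.0) -> int:
    """GET `path` from ip:port while presenting `host` as the Host header; return the status code.

    Redirects are not followed, so an HTTP-to-HTTPS redirect shows up as a 3xx status.
    """
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        conn.request("GET", path, headers={"Host": host})
        response = conn.getresponse()
        response.read()  # drain the body before closing
        return response.status
    finally:
        conn.close()
```

Usage would look like status_via_ip("172.16.5.8", "ainsey11.com").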

Pipeline configuration

Since my Hugo content and configuration is stored on my git server, I may as well make use of my CI/CD pipeline service.

For this, I run Drone, again inside my Kubernetes cluster. It’s a nice and simple pipeline service that meets my needs without being excessive on resource consumption.

Here is the process flow:

Changes made in local copy ↓

Run the site locally with hugo server --buildDrafts to preview changes ↓

Commit changes to git repo ↓

Push commit to git repo ↓

Pipeline starts ↓

Pipeline updates my theme via git submodule commands ↓

Pipeline runs a hugo build to generate the static content ↓

Pipeline copies the files to my ZFS storage server via rsync ↓

ZFS Replication syncs the files to my other storage servers within my network ↓

Nginx serves the updated files straight away, with no reload needed
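The ZFS replication step is normally snapshot-based. The receiving side already holds an earlier snapshot, and the sender only ships the changes since the most recent snapshot both sides share (zfs send -i base newest). Below is a generic sketch of how that common base is chosen. The replication tooling itself isn't covered here, and the snapshot names are hypothetical.

```python
from typing import List, Optional, Tuple


def incremental_plan(source: List[str], target: List[str]) -> Tuple[Optional[str], str]:
    """Return (base, newest) for an incremental `zfs send -i base newest`.

    `source` and `target` list snapshot names oldest-first. base is None when the
    target shares no snapshot with the source, meaning a full send is needed; if
    base == newest, the target is already up to date.
    """
    if not source:
        raise ValueError("source has no snapshots to send")
    newest = source[-1]
    shared = set(target)
    # Walk backwards so the most recent common snapshot wins.
    base = next((snap for snap in reversed(source) if snap in shared), None)
    return base, newest
```

If the source has snapshots s1 to s3 and the target has s1 and s2, only the delta from s2 to s3 crosses the network.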

Here is a short snippet from my .drone.yml configuration file to serve as an example:

```yaml
- name: Version check
  image: klakegg/hugo:ext-alpine
  commands:
    - echo "Checking Hugo version."
    - hugo version

- name: Build
  image: klakegg/hugo:ext-alpine
  commands:
    - echo "Building site."
    - ls -la
    - apk update
    - apk add git
    - git submodule update --init --recursive
    - hugo --minify --destination /drone/src/build

- name: Upload to ZFS Pools
  image: alpine:latest
  commands:
    - apk update
    - apk add openssh-client rsync
    - mkdir -p ~/.ssh
    - eval `ssh-agent`
    - echo "$SSH_KEY" > ~/.ssh/id_rsa
    - chmod 600 ~/.ssh/id_rsa
    - ssh-add ~/.ssh/id_rsa
    - echo -e "Host *\n\tStrictHostKeyChecking no\n\n" > ~/.ssh/config
    # RSYNC COMMAND GOES HERE
  environment:
    SSH_KEY:
      from_secret: drone_ssh_key
```

Final thoughts

It’s early days for me and my new friend, Hugo. However, I’ve found setting it up to be an enjoyable process. This post is the first lengthy piece I’ve written with Hugo, and I must say, it’s been quite nice to use familiar tools such as VS Code to write it.

I’m much happier with my content being hosted at home. I can control access, resources and routing using my existing services and tools, whilst still allowing internet readers to view my website. Hugo is so much faster than WordPress and comes with some really nice features. I can scale it easily inside Kubernetes too!

Stay tuned for more posts. Now that I’m not stuck on a horrid blogging platform, I feel more of a desire to write.

Ainsey